
Introduction

In the rapidly evolving world of AI in healthcare, Google DeepMind has once again pushed the boundaries with the launch of MedGemma, a collection of open models for understanding medical text and images. Built on the Gemma 3 architecture as part of the Health AI Developer Foundations (HAI-DEF) initiative, MedGemma brings generative multimodal capabilities to researchers, developers, and innovators, offering flexibility, transparency, and strong performance.

What Is MedGemma?

MedGemma is part of Google DeepMind's Gemma family of lightweight models. It comes in three main variants:

- MedGemma 4B Multimodal – a compact model that handles both medical images (such as X-rays) and text (such as clinical notes).

- MedGemma 27B Text-only – a powerful model tuned for deep medical language reasoning.

- MedGemma 27B Multimodal – the largest variant, processing both textual and visual data with extended comprehension capabilities.

Because the model weights are openly available, developers retain full control over privacy, infrastructure, and modifications, whether they run the models locally or on platforms such as Google Cloud or Hugging Face.
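The trade-off between the three variants can be made concrete by encoding them as data and choosing the largest one that both fits a parameter budget and accepts the required input type. This is a minimal sketch. The Hugging Face repository IDs are assumptions based on the published model cards and should be verified before use. The selection policy in `pick_variant` (prefer the largest model that fits) is hypothetical and is not an official recommendation.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Variant:
    name: str
    hf_id: str            # assumed Hugging Face repo ID; verify on the model card
    params_billion: int   # nominal size taken from the variant name
    accepts_images: bool


# The three MedGemma variants described above.
VARIANTS: Tuple[Variant, ...] = (
    Variant("MedGemma 4B Multimodal", "google/medgemma-4b-it", 4, True),
    Variant("MedGemma 27B Text-only", "google/medgemma-27b-text-it", 27, False),
    Variant("MedGemma 27B Multimodal", "google/medgemma-27b-it", 27, True),
)


def pick_variant(needs_images: bool, max_params_billion: int) -> Optional[Variant]:
    """Hypothetical policy: the largest in-budget variant that supports the input type.

    max() keeps the first of equally sized candidates, so text-only work
    prefers the text-only 27B model over the multimodal one.
    """
    candidates = [
        v for v in VARIANTS
        if v.params_billion <= max_params_billion and (v.accepts_images or not needs_images)
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda v: v.params_billion)
```

For example, `pick_variant(needs_images=True, max_params_billion=8)` returns the 4B multimodal entry, and a budget below 4B returns `None`.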


Key Capabilities & Highlights

1. Multimodal Interpretation and Report Generation

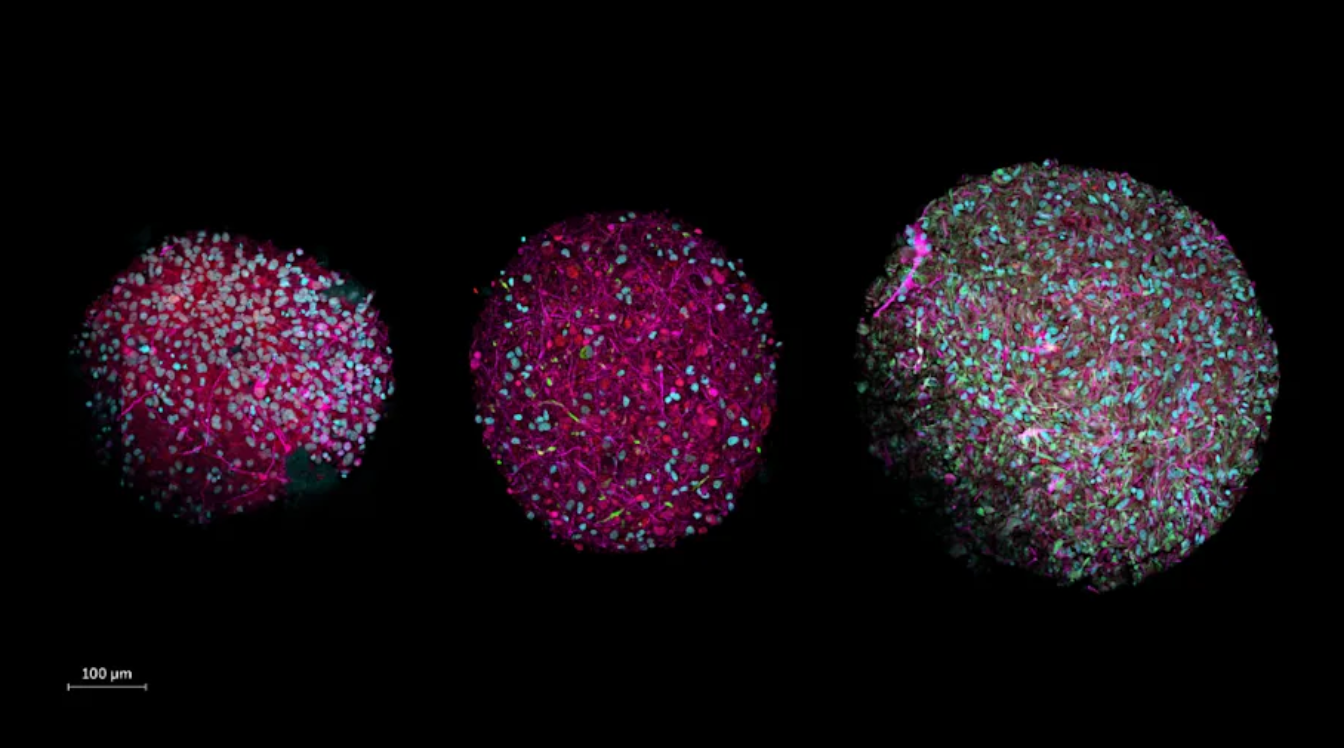

- MedGemma 4B can draft radiology-style reports from medical images. In an expert evaluation, a US board-certified radiologist judged 81% of its generated chest X-ray reports accurate enough to result in patient management similar to that based on the original radiologist reports, placing it among the best models in its size class.

- MedGemma 27B Text-only scores 87.7% on MedQA, a benchmark of USMLE-style multiple-choice questions, while being far more cost-efficient to run than much larger models.
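A MedQA score such as 87.7% is an accuracy figure: the fraction of multiple-choice questions on which the model's chosen option matches the answer key. The following is a minimal scoring sketch. It assumes the prompt asks the model to finish with a line such as `Answer: C`. The official evaluation harness may parse outputs differently, so `extract_choice` and its regular expression are illustrative only.

```python
import re
from typing import Optional, Sequence

# Assumed output convention: the model ends with "Answer: <letter>".
# A-E covers both the 4-option and 5-option versions of MedQA.
# The trailing \b stops words like "because" from matching as "B".
ANSWER_PATTERN = re.compile(r"answer\s*:\s*\(?([A-E])\b", re.IGNORECASE)


def extract_choice(model_output: str) -> Optional[str]:
    """Return the last option letter given after 'Answer:', or None if absent."""
    matches = ANSWER_PATTERN.findall(model_output)
    return matches[-1].upper() if matches else None


def accuracy(predictions: Sequence[Optional[str]], gold: Sequence[str]) -> float:
    """Fraction of questions where the extracted letter equals the key.

    Unparseable outputs (None) count as wrong.
    """
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must be the same length")
    if not gold:
        raise ValueError("need at least one question")
    correct = sum(p == g for p, g in zip(predictions, gold))
    return correct / len(gold)
```

This design counts answers that cannot be parsed as incorrect rather than skipping them, so formatting failures lower the score instead of hiding.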

2. Advanced Clinical Reasoning & Efficiency

The 27B variants (both text-only and multimodal) display robust clinical reasoning, data retrieval abilities, and a deep understanding of medical language. They hold their own against larger, closed-source models, in some cases approaching their performance at a fraction of the compute cost.

3. Lightweight & Accessible

- MedSigLIP is a compact (about 400M-parameter) image-and-text encoder trained for classification, retrieval, and other structured imaging tasks, which makes it well suited to fast, efficient applications.

- The 4B model and MedSigLIP can each run on a single GPU, and can even be adapted for mobile devices.
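Whether a model fits on a single GPU depends mostly on weight memory: the number of parameters times the bits per parameter, divided by 8 to get bytes. The sketch below applies that rule. The 20% overhead factor for activations and the KV cache is a rough assumption. Real usage depends on context length, batch size, and the serving runtime, and the nominal 4B and 27B sizes are rounded.

```python
def weight_memory_gb(params_billion: float, bits_per_param: int = 16) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes).

    (params_billion * 1e9 params) * (bits / 8 bytes) / 1e9 simplifies to
    params_billion * bits / 8.
    """
    return params_billion * bits_per_param / 8


def fits_on_gpu(params_billion: float, gpu_gb: float, bits_per_param: int = 16,
                overhead: float = 1.2) -> bool:
    """Rough check: weights plus an assumed 20% overhead must fit in GPU memory."""
    return weight_memory_gb(params_billion, bits_per_param) * overhead <= gpu_gb
```

At 16-bit precision, the 4B model needs about 8 GB for its weights, so it fits comfortably on a 24 GB card. The 27B models need about 54 GB, or 13.5 GB if quantized to 4 bits.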

4. Open, Customizable & Privacy-Preserving

Unlike API-based models, MedGemma’s open nature allows:

- Running on local or institutional hardware, addressing compliance and privacy needs.

- Fine-tuning and adaptation for specific healthcare workflows.

- Prompt engineering and agentic orchestration with other systems (e.g. FHIR interpreters, search, or speech modules).
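One example of orchestration with a FHIR source is a retrieval step that flattens FHIR R4 `Observation` resources from a `Bundle` into plain-text context for a MedGemma prompt. This is a minimal sketch. The function names and prompt layout are hypothetical, and it handles only `valueQuantity` and `valueString` values.

```python
from typing import Any, Dict, List


def observation_to_line(obs: Dict[str, Any]) -> str:
    """Render one FHIR Observation as '- label: value (time)'."""
    code = obs.get("code", {})
    # Prefer the human-readable code.text, else the first coding with a display name.
    label = code.get("text") or next(
        (c["display"] for c in code.get("coding", []) if c.get("display")),
        "Unknown observation",
    )
    if "valueQuantity" in obs:
        qty = obs["valueQuantity"]
        value = f"{qty.get('value')} {qty.get('unit', '')}".strip()
    else:
        value = obs.get("valueString", "no value recorded")
    when = obs.get("effectiveDateTime")
    return f"- {label}: {value}" + (f" ({when})" if when else "")


def build_prompt(bundle: Dict[str, Any], question: str) -> str:
    """Collect Observations from a FHIR Bundle and place them before a question."""
    resources: List[Dict[str, Any]] = [e.get("resource", {}) for e in bundle.get("entry", [])]
    lines = [observation_to_line(r) for r in resources if r.get("resourceType") == "Observation"]
    return "Patient observations:\n" + "\n".join(lines) + f"\n\nQuestion: {question}"
```

Non-Observation resources (for example, `Patient`) are skipped. A fuller integration would also map other resource types and value kinds.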

5. Use Case Versatility

MedGemma’s applicability spans multiple domains:

- Radiology report generation and visual question-answering.

- Clinical summarization, SOAP note writing, triage support.

- Diagnosis assistance via image-text consistency checking.

- Synthetic data creation for training and education.

- Multilingual patient communication and global health outreach.

Example implementations include a Hugging Face-hosted demo that automates pre-visit data gathering, as well as MedSigLIP-based improvements to chest X-ray triage.
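Image-text encoders such as MedSigLIP support zero-shot classification and retrieval by comparing embeddings. The encoder embeds both the image and a set of candidate text labels, and the labels are then ranked by their similarity to the image. The sketch below shows only that ranking step. It uses toy three-dimensional vectors as stand-ins for real MedSigLIP embeddings, since producing those requires the model itself. SigLIP-style models score an image-text pair as a positively scaled cosine similarity plus a bias, so ranking by cosine similarity gives the same order for a given image.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("zero-length embedding")
    return dot / (norm_a * norm_b)


def rank_labels(image_emb: Sequence[float],
                label_embs: Dict[str, Sequence[float]]) -> List[Tuple[str, float]]:
    """Return (label, similarity) pairs, most similar first."""
    scores = [(label, cosine_similarity(image_emb, emb)) for label, emb in label_embs.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)
```

In a triage setting, the labels would be short descriptions such as "a chest X-ray with pleural effusion" and "a normal chest X-ray". Any flagging threshold on the top score would have to be validated on local data.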

Why MedGemma Matters

- Democratizing Healthcare AI

MedGemma makes high-quality medical AI accessible to researchers, startups, and clinics without large licensing fees.

- Transparency and Trust

Openly available models let the community examine, critique, and improve their capabilities, fostering trust, safety, and innovation.

- Accelerating Research & Innovation

Through fine-tuning, prompt engineering, and efficient deployment, MedGemma lowers the barrier to experimentation in diagnostics, triage, and patient communication.

- Alignment with Ethical AI Principles

The HAI-DEF framework supports responsible use: its model cards and usage policies direct developers to validate the models rigorously before any clinical deployment.

Considerations & Cautions

Despite its strengths, developers should treat MedGemma as a foundational research tool, not a clinical-grade product. Real-world usage requires:

- Rigorous validation on target data and workflows.

- Adherence to institutional and regulatory guidelines.

- System designs incorporating human-in-the-loop oversight, safeguards against hallucinations, and accountability mechanisms.
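One way to enforce human-in-the-loop oversight in software is to treat model output as a draft that cannot be released until a clinician reviews it, and to record each decision for accountability. The class and method names below are hypothetical. A production system would also need authentication, persistent audit storage, and integration with institutional workflows.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional, Tuple


class Status(Enum):
    DRAFT = "draft"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class DraftReport:
    """Model-generated text that stays a draft until a named clinician reviews it."""
    text: str
    model_id: str
    status: Status = Status.DRAFT
    reviewer: Optional[str] = None
    audit_log: List[Tuple[str, str]] = field(default_factory=list)

    def review(self, reviewer: str, approve: bool, edited_text: Optional[str] = None) -> None:
        if self.status is not Status.DRAFT:
            raise ValueError("report has already been reviewed")
        if edited_text is not None:
            self.text = edited_text  # the clinician's correction replaces the model draft
        self.status = Status.APPROVED if approve else Status.REJECTED
        self.reviewer = reviewer
        self.audit_log.append((reviewer, self.status.value))  # who decided what

    def release(self) -> str:
        """Only clinician-approved reports leave the system."""
        if self.status is not Status.APPROVED:
            raise PermissionError("report has not been approved by a clinician")
        return self.text
```

In this design, the safe path is the default: unreviewed or rejected drafts raise an error instead of silently reaching a patient record.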

Getting Started with MedGemma

- Explore the MedGemma technical report and model card for details on architecture, training data, and benchmarks.

- Access the models via Hugging Face or Google Cloud platforms.

- Experiment with MedGemma 4B for multimodal prototypes, or with the 27B models for more demanding reasoning tasks.

- Leverage MedSigLIP for structured image-based tasks like classification or retrieval.

- Join the HAI-DEF community for collaboration, feedback, and shared learning.
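A minimal quickstart for the 4B multimodal model with the Hugging Face `transformers` pipeline might look like the sketch below. It assumes the following:

- A recent `transformers` release that includes the `image-text-to-text` pipeline.
- A CUDA GPU.
- The repository ID `google/medgemma-4b-it`.
- That you have accepted the model's terms on Hugging Face and logged in.

The message layout follows the chat-style format shown on the model card. Treat this as a starting point, not a validated clinical tool.

```python
import functools
from typing import Any, Dict, List

MODEL_ID = "google/medgemma-4b-it"  # assumed repo ID; the checkpoint is gated


def build_messages(image: Any, instruction: str,
                   system_prompt: str = "You are an expert radiologist.") -> List[Dict[str, Any]]:
    """Chat-style input: a system turn plus a user turn holding text and one image."""
    return [
        {"role": "system", "content": [{"type": "text", "text": system_prompt}]},
        {"role": "user", "content": [
            {"type": "text", "text": instruction},
            {"type": "image", "image": image},
        ]},
    ]


@functools.lru_cache(maxsize=1)
def _load_pipeline():
    # Deferred imports keep build_messages usable without torch/transformers installed.
    import torch
    from transformers import pipeline

    return pipeline("image-text-to-text", model=MODEL_ID,
                    torch_dtype=torch.bfloat16, device="cuda")


def generate_report(image: Any, instruction: str = "Describe this chest X-ray.",
                    max_new_tokens: int = 300) -> str:
    pipe = _load_pipeline()  # loaded on first call, then reused
    output = pipe(text=build_messages(image, instruction), max_new_tokens=max_new_tokens)
    # The pipeline returns the whole conversation; the last turn is the model's reply.
    return output[0]["generated_text"][-1]["content"]
```

On a suitable machine, the call is `generate_report(Image.open("chest_xray.png"))` after `from PIL import Image`, where the file name is a placeholder. Caching the pipeline avoids reloading several gigabytes of weights on every request.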

Conclusion

Google DeepMind’s MedGemma empowers a new wave of healthcare AI development by combining generative multimodal capabilities with accessibility, flexibility, and transparency. Whether generating reports from images, helping triage patients, or summarizing clinical data, MedGemma equips innovators with tools once gated behind proprietary walls. As the world strives toward equitable healthcare, open medical AI like MedGemma can help bridge gaps, spark research breakthroughs, and ultimately support better patient outcomes.
